When I think of the difficulty of regulating technology, I can’t help but think of the General Data Protection Regulation (GDPR), the gift that keeps giving…cookie disclaimers. It is a prime example of how difficult it is for legislators to put regulations around technology.

As we enter the artificial intelligence (AI) era, it’s only natural to wonder if the forthcoming regulations in this realm will bring a similar mix of benefits and annoyances as the GDPR. Will we find ourselves trading cookie disclaimers for some new digital hurdle? Or will we get legislation that makes logical sense and provides appropriate guardrails for this important technology?

Leading the charge from the industry side of things is OpenAI CEO Sam Altman, an affable frontman who spent last month making the rounds in Washington, DC, and testifying before Congress. He shocked many with his reply when Senator John Kennedy of Louisiana asked, “You make a lot of money. Do you?” Altman’s surprising response was, “I make no… I get paid enough for health insurance. I have no equity in OpenAI.” That is hardly the answer you’d expect from a former Silicon Valley venture capitalist. Altman was the head of Y Combinator, a start-up accelerator that has helped launch more than 4,000 companies, including Airbnb, Coinbase, Cruise, DoorDash, Dropbox, Instacart, Quora, PagerDuty, Reddit, Stripe and Twitch. His testimony has left many lawmakers with a guarded but favorable impression of his motives and his ability to provide guidance.

There is a lot of fear and excitement about AI right now. AI is also drawing many comparisons to nuclear technology. Though the two are vastly different in nature, they share a striking resemblance in the profound impact they can have on our world. Both hold the promise of significant advancements that could revolutionize various sectors, yet they also carry the potential for misuse with far-reaching, even catastrophic, consequences.

Harnessed correctly, the power of nuclear technology has led to breakthroughs in energy production, medical treatments, and scientific research. Similarly, AI, with its ability to learn and adapt, has the potential to transform industries, from healthcare and education to transportation and beyond. It’s a tool that could redefine the boundaries of what is possible.

However, the same power that makes these technologies so promising also makes them dangerous. Nuclear technology can be turned into devastating weapons, and when mishandled it can cause environmental disasters, as the Chernobyl and Fukushima incidents showed. AI, too, has a dark side. It could be exploited to create deepfakes, contributing to misinformation and manipulation. It could be used to develop autonomous weapons or intrusive surveillance systems, posing threats to privacy and security.

The dual-use nature of AI and nuclear technologies, which have both civilian and military applications, further complicates the matter. This characteristic underscores the urgent need for careful regulation and oversight. Just as the International Atomic Energy Agency was established to promote the peaceful use of nuclear energy and prevent its misuse, there is a growing call for similar regulatory bodies for AI.

Perhaps the most concerning aspect is the risk of uncontrolled advanced AI systems. These systems could act in ways not aligned with human values and interests, leading to unforeseen and potentially catastrophic consequences. This resembles a nuclear meltdown, where the chain reaction spirals out of control, causing widespread destruction.

Hence, this powerful technology is squarely in the sights of regulators whose concerns range from the hyperbolic sci-fi storylines of AI takeovers, as in The Matrix and The Terminator, to more down-to-earth economic issues that hit close to home, like job loss.

Proposed Regulations by Country

Governments worldwide are starting to recognize the need for regulations specific to AI. The European Union has been at the forefront, proposing the AI Act, which seeks to establish a comprehensive legal framework for AI systems. The act addresses ethical and legal concerns surrounding AI, including transparency, accountability, and human oversight.

The AI Act proposes a risk-based approach, classifying AI systems into four categories based on their potential for harm: unacceptable, high, limited, and minimal risk. Systems posing an unacceptable risk would be banned outright, high-risk systems would face strict requirements, and lower-risk systems would be subject to fewer obligations. This approach aims to balance the need for innovation with safeguarding human rights and ethical considerations.

Similarly, in the United States, Senate Majority Leader Chuck Schumer has launched a push for AI legislation, and lawmakers have floated the creation of a federal agency dedicated to overseeing AI and ensuring its responsible development and use. These efforts emphasize transparency, accountability, and promoting public trust in AI technologies.

Will Regulating AI Be Like Catching Shadows in the Dark?

Regulating AI may be like trying to catch shadows in the dark as the technology evolves and adapts faster than regulatory measures can keep pace. While proposed regulations are a step in the right direction, crafting effective legislation poses significant challenges.

One major obstacle is the rapid pace of technological advancements, making it difficult for regulations to keep up with the evolving capabilities of AI. Striking a balance between fostering innovation and addressing ethical concerns requires careful consideration and ongoing collaboration between policymakers, AI developers, and other stakeholders.

Even then, enforcing those regulations poses its own challenges. How can the government know what goes on behind closed data center doors? How would you tell an AI-generated image apart from one created by an Adobe Photoshop virtuoso? How forthcoming would organizations be about the source of their work?

Under traditional copyright law, humans are considered the authors of creative works. However, with AI-generated content, the lines blur. Can an AI system be considered the author if it autonomously generates a piece of art or music? Conversely, can the developer or owner of the AI system claim ownership? These questions highlight the need for updated copyright laws to address the unique challenges AI-generated content poses.

Responding to requests from Congress, members of the public, creators, and AI users, the Copyright Office is delving into the copyright and registration implications arising from AI-generated content. Recognizing the need to address the copyrightability and registration challenges associated with these works, the Office is rolling out fresh registration guidance.

This guidance emphasizes that applicants must disclose the presence of AI-generated content when submitting works for registration. It outlines the procedures for making such disclosures, updating pending applications, and correcting copyright claims already registered without the required disclosure, thereby ensuring accuracy in the public record. The US Copyright Office published *Copyright Registration Guidance: Works Containing Material Generated by Artificial Intelligence* in March 2023, which explains:

> As the agency overseeing the copyright registration system, the Office has extensive experience in evaluating works submitted for registration that contain human authorship combined with uncopyrightable material, including material generated by or with the assistance of technology. It begins by asking “whether the ‘work’ is basically one of human authorship, with the computer [or other device] merely being an assisting instrument, or whether the traditional elements of authorship in the work (literary, artistic, or musical expression or elements of selection, arrangement, etc.) were actually conceived and executed not by man but by a machine.” In the case of works containing AI-generated material, the Office will consider whether the AI contributions are the result of “mechanical reproduction” or instead of an author’s “own original mental conception, to which [the author] gave visible form.” The answer will depend on the circumstances, particularly how the AI tool operates and how it was used to create the final work. This is necessarily a case-by-case inquiry. If a work’s traditional elements of authorship were produced by a machine, the work lacks human authorship and the Office will not register it. For example, when an AI technology receives solely a prompt from a human and produces complex written, visual, or musical works in response, the “traditional elements of authorship” are determined and executed by the technology—not the human user. Based on the Office’s understanding of the generative AI technologies currently available, users do not exercise ultimate creative control over how such systems interpret prompts and generate material. Instead, these prompts function more like instructions to a commissioned artist—they identify what the prompter wishes to have depicted, but the machine determines how those instructions are implemented in its output.
>
> For example, if a user instructs a text generating technology to “write a poem about copyright law in the style of William Shakespeare,” she can expect the system to generate text that is recognizable as a poem, mentions copyright, and resembles Shakespeare’s style. But the technology will decide the rhyming pattern, the words in each line, and the structure of the text. When an AI technology determines the expressive elements of its output, the generated material is not the product of human authorship. As a result, that material is not protected by copyright and must be disclaimed in a registration application.

Legal Takeaways for Businesses Using AI

As AI becomes increasingly integrated into our lives, addressing the legal and regulatory concerns surrounding its development and use is imperative. Copyright and trademark issues related to AI-generated content necessitate updated laws that recognize the unique challenges posed by AI.

Crafting effective regulations requires striking a balance between innovation and ethical considerations. Collaboration between policymakers, AI developers, and other stakeholders is essential to navigate the evolving landscape of AI. Ultimately, a global approach to regulation is necessary to ensure responsible AI development and maximize its potential benefits while safeguarding human rights and societal well-being.

Overall, there appears to be plenty of upside to AI for businesses, but the areas I’d be watching in the near term include the following:

- Generation of IP – If you generate a logo you wish to trademark, or a piece of writing or code you wish to copyright, keep in mind the guidance from the USPTO and the Copyright Office on what can be protected.

- Data Privacy – This one has many dimensions, but one clear-cut issue is that when you share data with a chatbot like ChatGPT, you may be breaking confidentiality obligations to customers and partners. That data may require

- Accuracy – While chatbots can often provide great results, they can and will hallucinate, making up facts that sound so compelling at face value that they appear legit. In one recent incident, a lawyer who used ChatGPT to generate a legal brief found himself in hot water when the AI-generated case citations turned out to be entirely fictional.

Despite these legal considerations, I am very bullish on the prospects of AI, not only for the productivity gains it offers in the kind of knowledge work and marketing I do, but also for the ways it could improve our world in science and economics.

Tip of the Week: ChatGPT Plugins

First and foremost, the plugin model and UX for ChatGPT plugins are terrible, in my opinion. It’s only a stopgap until OpenAI finds a better way to implement third-party integrations. Plugins are hard to find because the ChatGPT plugin store is not easy to sort or search, and unlike the Apple App Store and other plugin directories, it has no user ratings and no way to browse plugins by category. On top of that, it’s hard to verify who exactly is providing the plugins. Despite that, there are many useful plugins if you can separate the wheat from the chaff.

To use a plugin, go to the ChatGPT plugin store and install it. Only three plugins can be enabled in a single chat, so choose wisely.

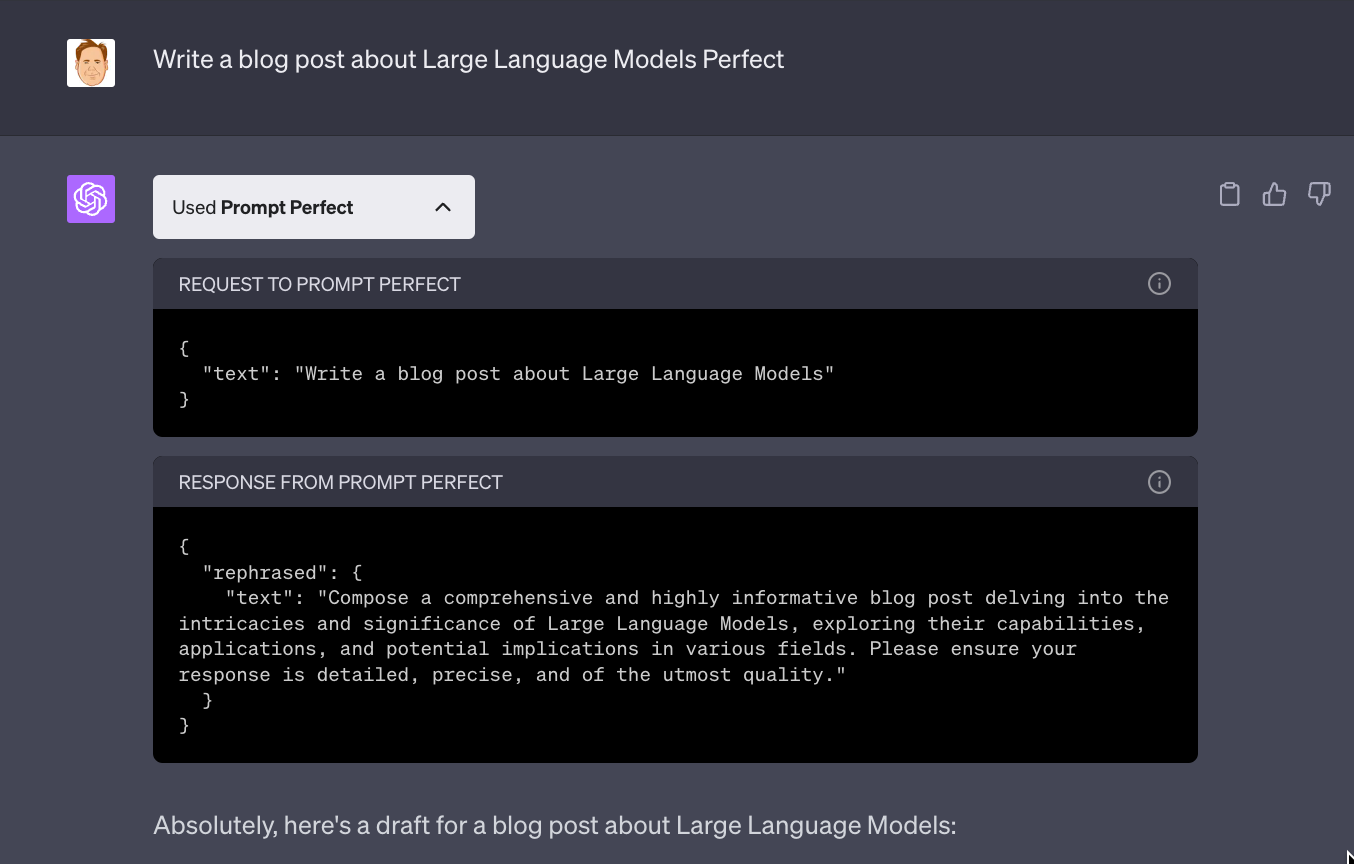

- Perfect Prompt – A ChatGPT plugin that helps users craft better prompts for the chatbot. If you struggle to write effective prompts, just start your prompt with the word “perfect.” As discussed in a previous post, it won’t create SuperPrompts, but it does provide a guided way to improve what you are doing today.

- Web Pilot – This plugin lets you provide a URL, optionally along with instructions for interacting with the page, extracting specific information, or handling its content. It’s my go-to plugin for retrieving information beyond the model’s knowledge. You’ll know you could benefit from it if ChatGPT prefaces a response with “As of my knowledge cutoff in September 2021.”

- Show Me Diagrams – Use this plugin when you want a visualization, want to ask follow-up questions about a diagram, or want to modify one. I think this has real promise, as it does a good job of accurately turning text into diagrams in the Mermaid format. In the long term, I’d like to see a way to style the charts to improve their aesthetics. But it’s a strong foundation for text-to-diagram generation, which I think will be a huge time-saver for many of us.

What I Read this Week

- Schumer launches ‘all hands on deck’ push to regulate AI – The Washington Post

- We need to regulate AI. Its creators should lead the way – The Hill

- How Sam Altman Stormed Washington to Set the AI Agenda – The New York Times

- Choosing the Right Vector Index For Your Project – Jesus Rodriguez, The Sequence

- Artificial Intelligence, Cryptocurrencies: Regulatory Frenemies Making Peace? – Crypto News

- AI Is Poised to “Revolutionize” Surgery – American College of Surgeons

What I Listened to this Week

https://open.spotify.com/embed/episode/2Bo9RBVo0aZB9nrJszGMXf

https://open.spotify.com/embed/episode/2CiP3A1ZTcVlaNjFLURiox

https://open.spotify.com/embed/episode/57wUsyENZVGLIXcVYzmnLK

AI Tools I am Evaluating

- Bardeen – Automate your manual and repetitive tasks using AI, and focus on the ones that matter. They have many use cases, but I think the marketing use cases for AI automation top the list.

- Virtual CMO – A free “virtual CMO” for solopreneurs that promises to solve any marketing problem in one minute (no email required). Calling it a CMO may be a bit of a stretch, but it’s nice for AI-assisted ideation.

- GPT Engineer – Specify what you want it to build, the AI asks for clarification, and then it generates an entire codebase from your prompt. GPT Engineer is designed to be easy to adapt and extend, and you can teach the agent how you want your code to look.

Midjourney Prompt for Newsletter Header Image

For every issue of the Artificially Intelligent Enterprise, I include the Midjourney prompt I used to create that edition’s header image.

Legal issues surrounding artificial intelligence: An intense courtroom scene captures the gravity of the legal battle surrounding artificial intelligence and its implications. A distinguished judge presides over the case, donning a black robe and displaying an air of authority. Lawyers from both sides present compelling arguments, their hands gesturing with conviction. The backdrop reveals a technologically advanced courtroom, with digital screens displaying complex algorithms. The composition employs a wide shot to encompass the entire scene, emphasizing the magnitude of the legal proceedings. The lighting is crisp and evenly distributed, ensuring clarity in capturing facial expressions and body language. The photograph is taken by renowned photographer Annie Leibovitz, who skillfully captures the tension and anticipation in the courtroom. The image reflects the influence of photojournalism, revealing the photographer’s dedication to documenting significant legal events. The photo elicits a sense of intellectual intensity, with the viewer immersed in the intricate legal web. --s 1000 --ar 16:9